The Rise of Context Engineering in AI

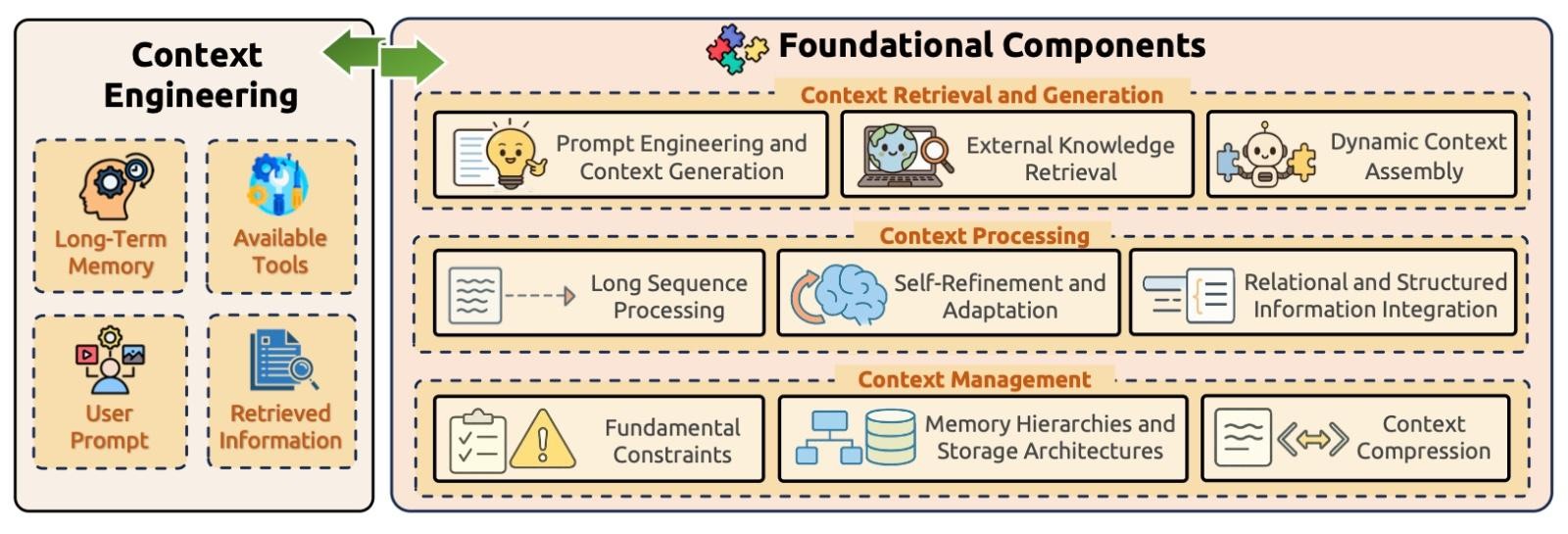

From Prompt Crafting to System Design: The Rise of Context Engineering in AI

For years, the magic behind large language models (LLMs) like ChatGPT seemed to lie in prompt engineering: figuring out how to phrase a question or instruction just right. But as these systems have matured and grown more integrated into complex workflows, a deeper,…